Key considerations for using big data analytics and artificial intelligence in Hong Kong’s banking industry

Late last year, the Hong Kong Monetary Authority (HKMA) issued two closely related circulars for the banking industry. In broad strokes, one sets out guiding principles on the use of big data analytics and artificial intelligence (BDAI), and the other sets out principles specifically on AI.

Building on these principles, banks are taking a fresh look at how they approach this evolving topic. Below, we lay out some of our observations and the issues that institutions need to think about.

HKMA’s circular on Consumer Protection in respect of Use of Big Data Analytics and Artificial Intelligence by Authorized Institutions¹ provides a number of guiding principles on what financial institutions need to consider when building BDAI platforms, harvesting data and interacting with their clients. The guidelines are focused on the public at large and touch on key aspects of the use of BDAI: governance and accountability, explainability, fairness, consistency, ethics and transparency.

Given some of the well-publicised challenges that financial institutions around the world have faced in adopting BDAI, a greater level of understanding needs to be embedded into the use of these powerful technologies.

Proper validation, which can be interpreted as comprehensive testing, is one of the steps the HKMA recommends before the launch of BDAI applications, because machine learning enables systems to be better informed and potentially facilitates decision-making. However, management at financial institutions needs to be aware of what these new decisions might be.

A case in point is the adoption of robo-advisors. Like human advisors, these AI-enabled advisors need to understand the financial capabilities of the customers they are dealing with in order to provide appropriate advice. Organisations therefore need to apply appropriate levels of oversight across the three lines of defence to best look after their clients. They also need to think long and hard about how to translate that oversight into the virtual world. For instance, they should consider strategies for continuity, monitoring and control, as well as contingency plans in case of emergency.

Another issue of concern is the public’s potential apprehension towards AI and how that can make people more critical of the technology. To address this, banks need to think about how they explain the mechanics of AI and the value it adds in enhancing their services. Transparency about how they pull information together, what they do with data and how they do it can help boost customer confidence.

One of the key points in HKMA’s circular on High-level Principles on Artificial Intelligence² is that the board and senior management are accountable for the outcomes of their organisation’s AI applications. This means that banks need to fully understand what the outcomes of their AI applications are, as these technologies are making automated decisions on behalf of the organisation. On the face of it, this is very broad and a little daunting, so the practical considerations of compliance need to be critically thought through.

One point to note here is the importance of having a thorough understanding of, and good control over, data – an algorithm may have been written the right way, but if the wrong data is fed into it, the results will not be what was intended. And if banks are not aware of the poor quality or poor integrity of their data, they are unlikely to recognise discrepancies between outcomes or see the effects of unintentional biases.

Additionally, institutions have to be able to explain AI-powered decisions to all relevant parties. The black-box excuse is no longer usable. This is likely to affect how quickly products are rolled out, because organisations will want to avoid scenarios where they have to explain why certain decisions did not work out. It also means that the journey of an AI use case from proof of concept (PoC) or minimum viable product (MVP) to production readiness is critical. Institutions will need to show how this journey was managed and tested at each stage, and to demonstrate that appropriate governance frameworks are in place. This is a challenge because many institutions work with AI partners and fintechs, or have capable AI teams of their own, that build cutting-edge solutions. Making those solutions enterprise ready is a different capability, and this is where things break down.

This makes a sound governance framework for AI imperative. But the reality is that the development of AI applications, at least in concept, is well ahead of the governance agreed to monitor and control them. Although some banks have established professional teams to take care of governance oversight for AI, that oversight is not nearly as mature as the applications themselves. Some AI solutions may have made their way into production without banks having in place the same kind of rigour and control over them as they have over regular software development cycles.

What do institutions need to do?

A product development-centric mindset coupled with the HKMA’s principles will create an interesting new dynamic that many institutions have not necessarily adopted yet. Banks need to start understanding and adopting the way technology companies work in product development, so that they can update, manage and change any algorithms they are developing in an agile way.

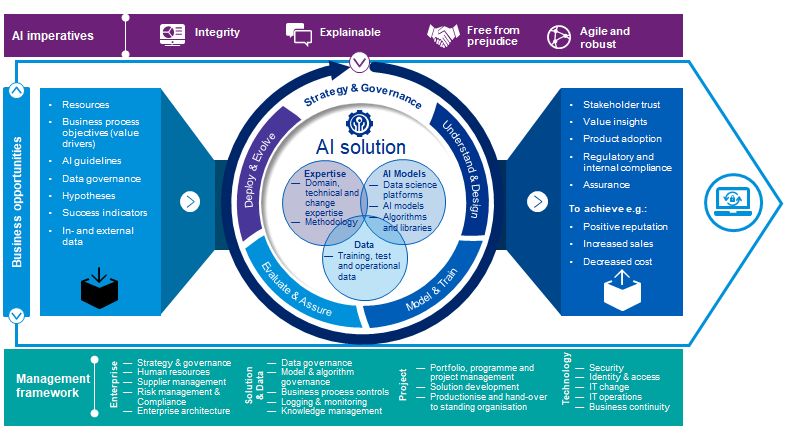

KPMG AI in Control

KPMG works with clients using its “AI in Control” framework and tools. “AI in Control” is KPMG’s approach to keeping AI honest and to addressing the risks and challenges that arise when bias is built into algorithms by the individual developers who write them.
